The Brussels Effect 2.0: Building AI Across Borders Without Losing Our Minds (or Margins)

Designing for AI regulation isn’t a chore—it’s a moat. The EU just handed us the bridge.

Compliance, or Competitive Advantage?

We’ve all been here before—building fast, moving fast, and then being asked: “Is this GDPR-compliant?”

The answer is usually a shrug, a scramble, and a prayer.

Now it’s déjà vu all over again. The EU Artificial Intelligence Act entered into force in August 2024; its bans on prohibited practices took effect in February 2025, and most remaining obligations apply from August 2026. And for anyone building AI that touches Europe (or might someday), it’s not just a set of compliance hoops to jump through. It’s a strategic crossroads.

We can keep reacting. Or we can use this moment to gain a competitive advantage: building trust, strengthening governance, and potentially even improving margins along the way.

What the EU AI Act Is

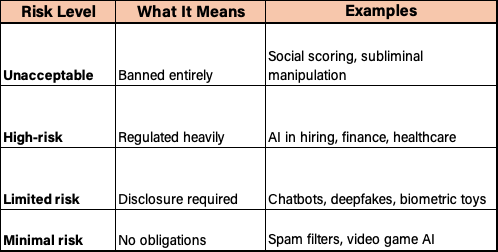

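The EU AI Act is the world’s first comprehensive legal framework for artificial intelligence. It regulates AI not by what it is, but by what it does, through a four-tier risk classification: unacceptable risk (banned outright), high risk, limited risk (transparency obligations), and minimal risk.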

If you’re building in the high-risk category—and a lot of us are—you’re now looking at required controls for:

Risk management

Data and model governance

Human oversight

Logging and audit trails

Robust documentation and transparency

And here’s the kicker: it applies extraterritorially. If you place an AI system on the EU market, or its output is used in the EU, you’re in scope, no matter where your company is based. Full stop.

📘 Source: European Commission – AI Act Press Release

Does the U.S. Have Anything Like This?

Not really. The closest we came was Executive Order 14110 (October 2023), which focused on frontier “dual-use foundation models” like GPT-4 or Claude Opus. It emphasized transparency, watermarking guidance, red-teaming, and sharing safety test results with the federal government, but it was an executive order directing federal agencies, not legislation passed by Congress.

Meanwhile, enforcement remains scattered:

FTC and DOJ handle consumer protection and competition

NIST offers voluntary guidance (like its AI Risk Management Framework)

Sector-specific laws and regulators (HIPAA in healthcare, FERPA in education, the CFPB in consumer finance) fill some of the gaps

There’s no national AI law. Just a growing patchwork and numerous regulatory signals.

📘 Sources:

Executive Order 14110, “Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence.” Signed by President Joseph R. Biden, October 30, 2023. Archived summary and context via Wikipedia.

Executive Order 14179, “Removing Barriers to American Leadership in Artificial Intelligence.” Signed by President Donald J. Trump, January 23, 2025.

Executive Order 14179, signed in January 2025, followed the revocation of EO 14110 and redirects federal agencies to prioritize American AI innovation, develop a national AI action plan, and review actions taken under the prior order. I reference both orders to show the continuity of federal engagement with AI policy, while also highlighting the change in strategic direction.

The Emissions Analogy: Why We Might All Build for Europe Anyway

You know how automakers design for California emissions or Euro 6 standards—because it’s easier than building fifteen versions of the same car?

That’s what’s already starting to happen with AI.

Major platforms (think Microsoft, Salesforce, SAP) are quietly aligning with EU AI Act principles, not out of fear, but because it opens doors. Enterprise and government buyers now see trust and compliance as strategic differentiators.

If your AI product can say “EU AI Act–ready”, that’s not red tape. That’s a sales asset.

“Trust may soon be your best growth hack. Especially in healthcare, finance, and public sector AI.”

U.S. Sovereignty and Political Whiplash

Here’s where it gets tricky. Even if compliance makes business sense, it runs headlong into our favorite American reflex: regulatory sovereignty.

There’s already hand-wringing over “foreign interference in innovation.” The EU’s precautionary stance can feel paternalistic to a U.S. ecosystem that celebrates risk-taking.

The EU says: Prove your AI won’t hurt anyone before launch.

The U.S. says: Launch it, and we’ll sue you later if you mess up.

So yes, adopting EU rules may cause friction internally, especially with product, legal, or policy teams who bristle at being seen as taking orders from Brussels.

But this isn’t new. It’s The Brussels Effect (Bradford, 2020): EU regulation that slowly becomes the de facto global standard, even in places that never voted for it.

📘 Source: The Brussels Effect: How the European Union Rules the World, Anu Bradford, Oxford University Press

How to Navigate Without Losing Your Shirt (or Your Sanity)

This isn’t a call to re-staff startups with AI ethicists and policy wonks. It’s about starting smart—and giving yourself optionality later.

Practical steps to take:

Know your risk tier

If you’re in healthcare, HR tech, finance (including insurance), or edtech, assume “high-risk” until proven otherwise.
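To make the “assume high-risk” rule concrete, here is a minimal Python sketch of a first-pass triage helper. The `RiskTier` enum mirrors the Act’s four tiers; `PRESUMED_HIGH_RISK_DOMAINS` and `presumed_tier` are hypothetical names, and treating any user-facing system as “limited” is a simplifying assumption. It is a planning heuristic, not a legal classification: the real test runs through the Act’s prohibited practices and its high-risk annexes.

```python
from enum import Enum


class RiskTier(Enum):
    """The AI Act's four risk tiers, from most to least regulated."""
    UNACCEPTABLE = "unacceptable"  # prohibited practices
    HIGH = "high"                  # risk management, oversight, logging, documentation
    LIMITED = "limited"            # transparency duties, e.g. telling users they're talking to an AI
    MINIMAL = "minimal"            # no tier-specific obligations


# Hypothetical: the sectors this article suggests treating as high-risk by default.
PRESUMED_HIGH_RISK_DOMAINS = {"healthcare", "hr", "finance", "insurance", "edtech"}


def presumed_tier(domain: str, user_facing: bool = False) -> RiskTier:
    """Conservative first-pass triage: assume HIGH until legal review says otherwise.

    Planning heuristic only. It never returns UNACCEPTABLE, because screening
    for prohibited practices needs a human review of the actual use case.
    """
    if domain.strip().lower() in PRESUMED_HIGH_RISK_DOMAINS:
        return RiskTier.HIGH
    if user_facing:
        # Simplifying assumption: anything people interact with gets transparency duties.
        return RiskTier.LIMITED
    return RiskTier.MINIMAL


print(presumed_tier("HR").value)                        # high
print(presumed_tier("retail", user_facing=True).value)  # limited
print(presumed_tier("logistics").value)                 # minimal
```

Defaulting to HIGH is deliberate: it is cheaper to downgrade a system after legal review than to retrofit controls onto one you assumed was exempt.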

Build transparency in early

Start logging model decisions, data sources, and red-teaming exercises now. You’ll thank yourself later.
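A minimal sketch of what “start logging now” might look like, assuming an append-only JSON Lines file. `DecisionRecord`, its fields, the model and source names, and the default file path are all hypothetical choices, not a schema the Act prescribes; the `event_type` field lets one log hold both production decisions and red-teaming exercises.

```python
from __future__ import annotations

import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass
class DecisionRecord:
    """One auditable event. Field names are illustrative, not a mandated schema."""
    model_id: str
    model_version: str
    input_summary: str                    # a summary or hash, not raw personal data
    output: str
    data_sources: list[str]
    event_type: str = "decision"          # or "red_team" for adversarial test runs
    human_reviewer: Optional[str] = None  # who signed off, if anyone
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


def to_log_line(record: DecisionRecord) -> str:
    """Serialize one record as a single JSON line (JSON Lines format)."""
    return json.dumps(asdict(record), sort_keys=True)


def append_to_log(record: DecisionRecord, path: str = "decision_log.jsonl") -> None:
    """Append-only writes leave earlier entries untouched for later audits."""
    with open(path, "a", encoding="utf-8") as fh:
        fh.write(to_log_line(record) + "\n")


record = DecisionRecord(
    model_id="claims-triage",          # hypothetical model name
    model_version="1.4.2",
    input_summary="12 engineered features, no free text",
    output="refer_to_human",
    data_sources=["claims_db_2024"],   # hypothetical source name
)
print(to_log_line(record))
```

Separating serialization (`to_log_line`) from storage (`append_to_log`) keeps the format easy to test and lets you swap the file for a log pipeline later without touching call sites.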

Design for toggles

Just like GDPR cookie banners, build features that can “comply up” for EU users while remaining lighter elsewhere.
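One way to sketch “comply up” toggles, assuming per-market configuration rather than separate codebases. `ComplianceProfile`, its flags, and the deliberately partial `EU_MARKETS` set are hypothetical; a real deployment would need the full list of member states and its own rules for deciding which market a user belongs to.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ComplianceProfile:
    """Per-market feature switches. All names here are hypothetical."""
    decision_logging: bool
    human_review_required: bool
    ai_disclosure_notice: bool
    explanation_on_request: bool


# Logging stays on everywhere, in the spirit of "build transparency in early."
BASELINE = ComplianceProfile(
    decision_logging=True,
    human_review_required=False,
    ai_disclosure_notice=False,
    explanation_on_request=False,
)
EU_STRICT = ComplianceProfile(
    decision_logging=True,
    human_review_required=True,
    ai_disclosure_notice=True,
    explanation_on_request=True,
)

# Hypothetical and deliberately incomplete: ISO country codes for a few EU member states.
EU_MARKETS = {"DE", "FR", "IE", "NL", "ES", "IT"}


def profile_for(country_code: str) -> ComplianceProfile:
    """Pick the stricter profile for EU markets and the lighter one elsewhere."""
    return EU_STRICT if country_code.strip().upper() in EU_MARKETS else BASELINE


print(profile_for("de").human_review_required)  # True
print(profile_for("US").human_review_required)  # False
```

Because the baseline already logs, moving every market to the stricter profile later is a configuration change rather than a rebuild.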

Speak the language of trust

Don’t just say “we’re compliant”—say “we’re trustworthy.”

Companies like Salesforce and Microsoft do this well: they embed trust into their product messaging and architecture, not just their legal disclaimers.

This resonates especially in enterprise and public sector sales, where credibility and accountability often take precedence over raw speed.

Treat compliance like ISO or SOC 2

It’s not a box-check. It’s a credibility asset. Trust sells.

If you’ve worked with data in regulated industries like healthcare or insurance, you already know this drill—now you’re just layering AI on top.
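Treating compliance like SOC 2 largely means keeping evidence for each control. Here is a minimal sketch of a readiness register built on the five control areas this article lists for high-risk systems; the evidence file names and the equal-weight `readiness_score` are assumptions for illustration, not an audit methodology.

```python
from __future__ import annotations

# The five control areas this article lists for high-risk systems.
CONTROL_AREAS = [
    "risk management",
    "data and model governance",
    "human oversight",
    "logging and audit trails",
    "documentation and transparency",
]


def readiness_gaps(evidence: dict[str, list[str]]) -> list[str]:
    """Return the control areas that have no evidence artifact attached yet."""
    return [area for area in CONTROL_AREAS if not evidence.get(area)]


def readiness_score(evidence: dict[str, list[str]]) -> float:
    """Share of control areas with at least one artifact (equal weights, an assumption)."""
    covered = len(CONTROL_AREAS) - len(readiness_gaps(evidence))
    return covered / len(CONTROL_AREAS)


# Hypothetical evidence register; file names are placeholders.
evidence = {
    "risk management": ["risk_register.xlsx"],
    "logging and audit trails": ["decision_log.jsonl"],
    "human oversight": [],  # an owner exists, but nothing is documented yet
}

print(readiness_gaps(evidence))
# ['data and model governance', 'human oversight', 'documentation and transparency']
print(readiness_score(evidence))  # 0.4
```

An empty list counts as a gap on purpose: a control with an owner but no documented artifact is exactly what a procurement or audit review will flag.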

Profit Incentive

Many companies still treat compliance as overhead instead of what it can be: a pricing advantage.

“The companies that align with EU-style transparency early may get to charge more—not less—for AI.”

Let’s break that down.

If you’re a software vendor, and your AI system comes with explainability, governance controls, and documented safeguards, you’re no longer just offering a product—you’re offering risk mitigation. That changes how procurement teams evaluate you. You’re not the vendor that raises red flags—you’re the one that helps them sleep at night. That can justify:

Premium pricing

Faster security and compliance approvals

Longer-term enterprise and public sector contracts

Now, if you’re a services company—a systems integrator, MSP, or consultancy—the story changes, but the value doesn’t. You’re not selling a black box; you’re selling how to deploy AI responsibly. If your delivery approach bakes in documentation, oversight, bias mitigation, and EU-style governance, you become a trusted partner, not a risk multiplier. That earns you:

More credibility in regulated sectors

Better positioning in competitive bids

The ability to charge for strategy, not just staffing and implementation services

Whether you’re selling the software or delivering the implementation, trust sells. Especially in healthcare, life sciences, and the public sector, where questions like “Can we defend this?” matter more than “Is it cool?”

Compliance has costs. But treating it like a market signal instead of a drag can turn it into your edge, especially as regulatory and reputational risks become part of the buyer’s calculus.

Conclusion: Design for Trust, Compete on Confidence

The EU AI Act is a real, enforceable law that is already reshaping conversations far beyond Brussels. Like GDPR before it, it won’t just influence how AI is regulated—it will change how AI is bought, sold, and scaled globally.

But to me, this isn’t just a compliance headache—it’s a strategic inflection point.

For product companies, it’s a chance to turn transparency into a market differentiator, not just a line in a sales deck. For services firms, it’s a way to lead with credibility, build trust earlier in the deal cycle, and gain a strategic advantage in conversations. I’ve seen firsthand how these dynamics shift buyer behavior.

The companies that win won’t just build powerful AI. They’ll be the ones who can explain it, defend it, and sell it without apology—in any market, under any scrutiny.

This is where regulation stops being red tape and starts being a roadmap.

And frankly, I’d rather design for that future now than scramble to catch up later.

📚 Sources

European Commission. “Artificial Intelligence Act: EU rules to ensure trustworthy AI.” April 2021.

https://ec.europa.eu/commission/presscorner/detail/en/ip_21_1682

White House. “Executive Order on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence.” October 30, 2023.

Archived summary and context via Wikipedia

White House. “Executive Order 14179: Removing Barriers to American Leadership in Artificial Intelligence.” January 23, 2025.

Bradford, Anu. The Brussels Effect: How the European Union Rules the World. Oxford University Press, 2020.

https://global.oup.com/academic/product/the-brussels-effect-9780190088583

National Institute of Standards and Technology (NIST). “Artificial Intelligence Risk Management Framework (AI RMF 1.0).” January 2023.

Future of Life Institute. Implementing the European AI Act. Accessed June 2025.